Vietnamese Universal Dependency Parsing

Important dates

- Sep 10, 2020: Registration open

- Nov 1, 2020: Registration closed

- Nov 7, 2020: Training data released

- Dec 5, 2020: Test set released

- Dec 6, 2020: Test result submission

- Dec 15, 2020: Technical report submission

- Dec 18, 2020: Result announcement (workshop day)

Dependency parsing is the task of determining syntactic dependencies between words in a sentence. The dependencies include, for example, the information about the relationship between a predicate and its arguments, or between a word and its modifiers. Dependency parsing can be applied in many tasks of natural language processing such as information extraction, coreference resolution, question-answering, semantic parsing etc.

Many shared-tasks on dependency parsing have been organized since 2006 by CoNLL (The SIGNLL Conference on Computational Natural Language Learning - https://www.conll.org/), not only for English but also for many other languages in a multilingual framework. From 2017, a Vietnamese dependency treebank of 3,000 sentences is included for the CoNLL shared-task “Multilingual Parsing from Raw Text to Universal Dependencies”. However, this available dependency treebank for Vietnamese is still small and its automatic conversion from the UD v1 to UD v2 contains several errors.

In the framework of the VLSP 2019 workshop, we proposed the first shared-task on Vietnamese dependency parsing in order to promote the development of dependency parsers for Vietnamese. A UD v2 corpus of 4000 Vietnamese sentences has been released for this shared task. This year, we are organizing for the second time the shared-task on dependency parsing.

A training corpus of approximately 10,000 dependency-annotated sentences will be given to participants one month before the test phase. For the test, participant systems will have to parse raw texts where no linguistic information (word segmentation, POS tags) is available.

The set of dependency relations is defined according to the universal dependencies (cf. UD v2 at https://universaldependencies.org/u/dep/index.html). A guideline of Vietnamese dependency annotation will be published for reference.

Data format

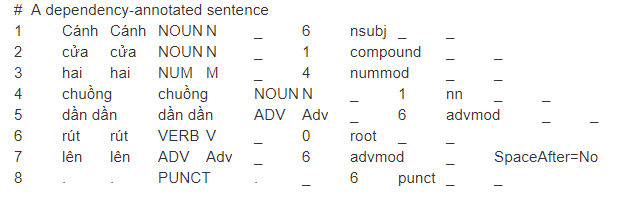

The dependency-annotated data must be encoded in CoNLL-U format (please refer to https://universaldependencies.org/format.html for a detailed description) as below.

Annotations are encoded in plain text files (UTF-8, normalized to NFC, using only the LF character as line break, including an LF character at the end of file) with three types of lines:

- Word lines containing the annotation of a word/token in 10 fields separated by single tab characters; see below.

- Blank lines marking sentence boundaries.

- Comment lines starting with hash (#).

Sentences consist of one or more word lines, and word lines contain the following fields:

- ID: Word index, integer starting at 1 for each new sentence; may be a range for multiword tokens; may be a decimal number for empty nodes (decimal numbers can be lower than 1 but must be greater than 0).

- FORM: Word form or punctuation symbol.

- LEMMA: Lemma or stem of word form (In Vietnamese the lemma is the same as the wordform)

- UPOS: Universal part-of-speech tag. X if not available.

- XPOS: Vietnamese part-of-speech tag; underscore if not available.

- FEATS: Morphological features; underscore if not available.

- HEAD: Head of the current word, which is either a value of ID or zero (0).

- DEPREL: Universal dependency relation to the HEAD (root iff HEAD = 0) or a defined language-specific subtype of one.

- DEPS: Enhanced dependency graph in the form of a list of head-deprel pairs.

- MISC: Any other annotation.

Example:

Training data

Participants will be provided with a Vietnamese dependency treebank containing about 10,000 sentences. Gold standard word segmentation and part-of-speech (POS) tags are available in the training set.

Test data

The test data will be given in raw text. Participant systems should return the dependency parsing result of the test data in CoNLL-U format.

Evaluation metrics

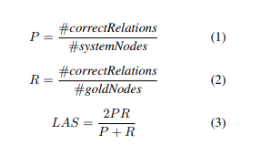

Participant system is evaluated using the LAS (Labeled Attachment Score) by comparing the gold relations of the test set and relations returned by the system. The LAS is given as follows:

We use the evaluation script published at the CoNLL 2018 (https://universaldependencies.org/conll18/conll18_ud_eval.py).

References

[1] https://universaldependencies.org/conll18/

[2] Proceedings of the CoNLL 2018 Shared Task: Multilingual Parsing from Raw Text to Universal Dependencies (https://www.aclweb.org/anthology/K18-2)